Privacy Grade for Apps

Introduction

Personal devices are ubiquitous. We carry them everywhere we go and give them unprecedented access to our private and sensitive information: where we work, live, and sleep. Tech giants like Google and Facebook have found ways to aggregate this information and sell it to companies in search of potential customers. Data collection is a big part of this process. With its Android ecosystem, Google is in a unique position to control the flow of data between our devices and its servers; Apple, for its part, does the same with iOS. On top of these platforms, third-party developers publish apps that collect their own copy of our private information and either accumulate it on their own servers (e.g. Facebook) or send it to advertising companies. To make matters worse, data brokers aggregate data from various sources and sell “enhanced” data back to customers looking to advertise their products.

In this article, I will review how data is collected, categorized, and processed on our mobile devices, along with the permission model that governs access to it. The focus is mostly on Android, but most of the concepts also hold for iOS unless specified otherwise.

Current model & safeguards

Apps can obtain sensitive information in several ways:

- Asking users - Apps ask users to provide their email, phone number, name, or other information via form-like UIs. Users are aware of what they are handing over and provide it willingly, with little suspicion.

- Requesting OS-level permissions - Some device-level operations, such as accessing location, the camera, or the microphone, need to go through the OS, which requests these permissions on the app’s behalf. The assumption behind this model is that the user understands the risk of granting such permissions. In reality, users skim through these dialogs and habitually press “OK” just to complete whatever is required on the screen.

- Inference - Not all APIs are protected by permissions. This lets apps collect device-level properties and build a fingerprint that ties a user to his/her physical device. Advertisers can then track the user across apps, which makes attribution easier.
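
To make the inference point concrete, here is a minimal sketch of how individually harmless, unprotected device properties could be combined into a stable identifier. The property names and values are hypothetical placeholders rather than the output of any specific Android API, and exact hashing is only the simplest form of the idea.

```python
import hashlib
import json


def device_fingerprint(properties: dict) -> str:
    """Derive a stable identifier from a set of device properties.

    The properties are serialized with sorted keys, so the same values
    always produce the same SHA-256 digest regardless of collection order.
    """
    canonical = json.dumps(properties, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical properties an app could read without a permission prompt.
props_seen_by_app_a = {
    "model": "Pixel 5",
    "os_version": "11",
    "screen": "1080x2340",
    "timezone": "Europe/Berlin",
    "locale": "de-DE",
}
# A second app on the same device reads the same values in a different order.
props_seen_by_app_b = dict(reversed(list(props_seen_by_app_a.items())))

fp_a = device_fingerprint(props_seen_by_app_a)
fp_b = device_fingerprint(props_seen_by_app_b)
print(fp_a == fp_b)  # True: both apps derive the same identifier
```

Neither app needed a permission to read these values, so nothing in the permission model flags this. Practical fingerprinting tends to draw on many more signals and to tolerate small changes between readings.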

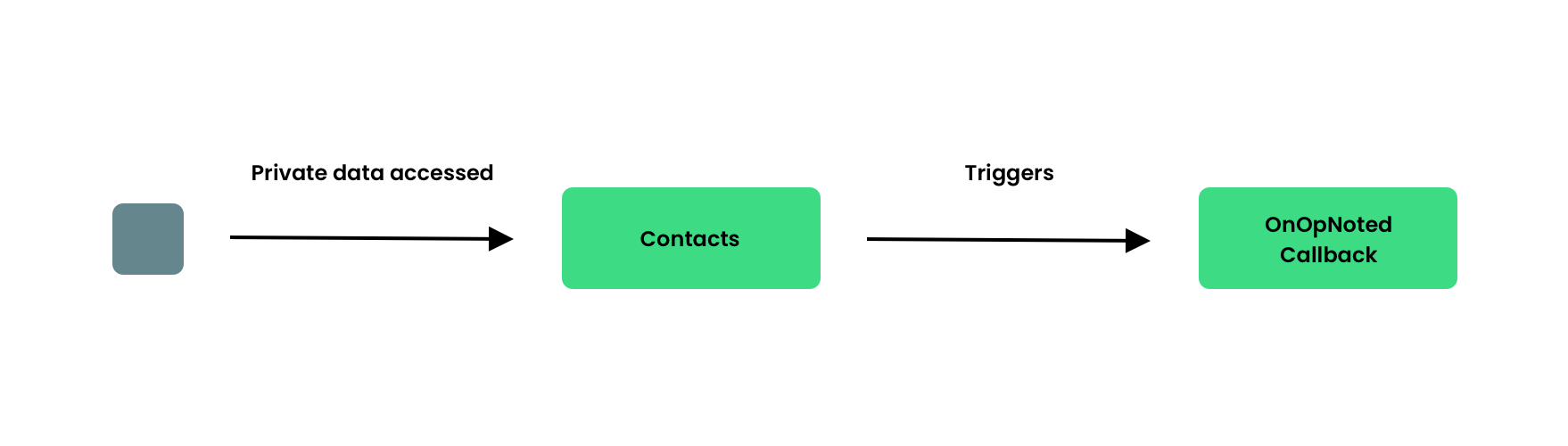

Over the years, Google has been revising how permissions work. Android originally granted app permissions at install time: users were presented with a list of permissions during installation, and once they accepted, there was no way to revoke those decisions. Android 6.0 (Marshmallow) introduced runtime permissions, giving users the ability to grant or deny individual permissions while the app is running. With Android 11, Google released a set of APIs for auditing permission usage, giving much-needed visibility into access behavior (https://developer.android.com/about/versions/11/privacy/data-access-auditing). These APIs allow developers and system integrators to assess permission usage more transparently.

Privacy grade

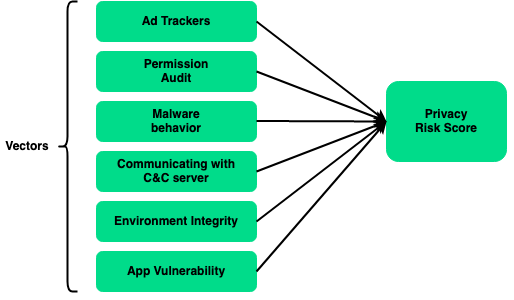

To evaluate the privacy risk associated with an app, we have to look at several parameters. The most important one is advertising trackers. How many trackers does an app have? How frequently are they contacted? Besides known trackers (Google AdMob, Facebook Audience Network, etc.), the app itself might collect data and send it to its own servers; we can treat these endpoints like third-party trackers. Another indicator is how often permissions are accessed and the context in which they are requested. Is it appropriate for a GPS tracking app to request the contacts permission? This also raises the question of vulnerabilities. Does the app contain loopholes that an attacker could exploit? Would the exploit allow him/her to export private data? Is the OS environment secure enough to shield private app data from being accessed by malicious actors? For example, is the device rooted or jailbroken?
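
The first two questions (how many trackers, and how often they are contacted) can be answered from captured traffic. The sketch below assumes the traffic has already been recorded as (timestamp in seconds, hostname) pairs; the tracker names and domains are hypothetical placeholders, and a real analysis would rely on a maintained tracker list.

```python
from collections import defaultdict
from typing import Optional

# Hypothetical tracker list (tracker name -> reporting domain).
# A real analysis would load a maintained, curated list instead.
KNOWN_TRACKERS = {
    "ad-network-a": "ads.tracker-a.example",
    "analytics-b": "collect.tracker-b.example",
}


def match_tracker(hostname: str) -> Optional[str]:
    """Return the tracker name if hostname is a tracker domain or a subdomain of one."""
    for name, domain in KNOWN_TRACKERS.items():
        if hostname == domain or hostname.endswith("." + domain):
            return name
    return None


def tracker_stats(traffic) -> dict:
    """Summarize tracker contacts in captured traffic.

    traffic: iterable of (timestamp_seconds, hostname) pairs.
    Returns {tracker_name: mean seconds between contacts}, or None for a
    tracker contacted only once. Non-tracker hosts are ignored.
    """
    hits = defaultdict(list)
    for ts, host in traffic:
        name = match_tracker(host)
        if name is not None:
            hits[name].append(ts)
    stats = {}
    for name, times in hits.items():
        times.sort()
        # Mean gap between consecutive contacts = total span / number of gaps.
        stats[name] = (times[-1] - times[0]) / (len(times) - 1) if len(times) > 1 else None
    return stats


traffic = [
    (0, "ads.tracker-a.example"),
    (120, "collect.tracker-b.example"),
    (500, "api.my-app.example"),  # the app's own backend (hypothetical)
    (1800, "ads.tracker-a.example"),
    (3600, "eu.ads.tracker-a.example"),
]
stats = tracker_stats(traffic)
print(len(stats), stats)  # 2 {'ad-network-a': 1800.0, 'analytics-b': None}
```

To treat the app's own servers like third-party trackers, their domain could simply be added to `KNOWN_TRACKERS`.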

Each evaluation vector will be graded out of 10 based on the severity of its impact. For example, if 10 trackers are sending sensitive information every 30 minutes, the tracker vector will be assigned the worst possible score (0/10). The same will be done for the remaining vectors. Finally, we can sum up the scores and divide by the number of vectors to get an average score. The scoring can be refined by attaching a weight to each vector, so that vectors with a higher weight influence the final score more than vectors with a lower weight.
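
A minimal sketch of the scoring step follows. The tracker formula is a hypothetical choice, picked only so that 10 trackers contacted every 30 minutes yields 0/10 as in the example; the vector names, the non-tracker scores, and the weights are also hypothetical. The overall score is the weighted average 100 · Σ(wᵢ · sᵢ) / (10 · Σ wᵢ), expressed as a percentage.

```python
def tracker_score(num_trackers: int, interval_minutes: float) -> float:
    """Hypothetical tracker score out of 10 (10 = best, 0 = worst).

    Both factors are capped at 1.0, so 10 or more trackers contacted every
    30 minutes (or more often) yields 0/10, matching the example in the text.
    """
    if num_trackers == 0:
        return 10.0
    count_factor = min(num_trackers / 10, 1.0)
    freq_factor = 1.0 if interval_minutes <= 0 else min(30 / interval_minutes, 1.0)
    return 10 * (1 - count_factor * freq_factor)


def overall_score(scores: dict, weights: dict) -> float:
    """Weighted average of per-vector scores (each out of 10), as a percentage."""
    total_weight = sum(weights[v] for v in scores)
    weighted_sum = sum(scores[v] * weights[v] for v in scores)
    return 100 * weighted_sum / (10 * total_weight)


scores = {
    "trackers": tracker_score(10, 30),  # worst case from the text: 0.0
    "permissions": 6.0,                 # hypothetical
    "vulnerabilities": 8.0,             # hypothetical
    "device_integrity": 10.0,           # hypothetical: device not rooted
}
weights = {"trackers": 3, "permissions": 2, "vulnerabilities": 1, "device_integrity": 1}

print(round(overall_score(scores, weights), 1))       # 42.9
print(overall_score(scores, {v: 1 for v in scores}))  # 60.0
```

With equal weights this reduces to the plain average (60%); giving trackers the largest weight pulls the same app down to about 42.9%.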

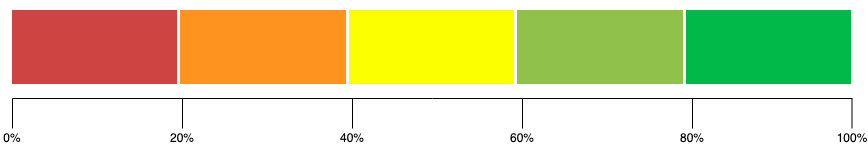

An app scoring above 80% will be considered the safest, while an app scoring between 0 and 40% will need further scrutiny because its privacy practices might be questionable.
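
The thresholds can be written down as a small helper to make the boundaries explicit. This sketch reads "above 80%" as strictly greater than 80 and "0-40%" as including 40; the text does not name the 40-80% range, so the label "intermediate" is a placeholder.

```python
def grade_band(score_percent: float) -> str:
    """Map an overall score (0-100%) to the bands described in the text.

    'above 80%' is read as strictly greater than 80, and '0-40%' as
    inclusive of 40. The 40-80% range is unnamed in the text, so it is
    labelled 'intermediate' here as a placeholder.
    """
    if not 0 <= score_percent <= 100:
        raise ValueError("score must be between 0 and 100")
    if score_percent > 80:
        return "safest"
    if score_percent <= 40:
        return "needs scrutiny"
    return "intermediate"


for s in (92.5, 60.0, 40.0):
    print(s, grade_band(s))
```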

Implementation

Implementing this system requires analyzing an app both statically and dynamically. Dynamic analysis reveals the app’s traffic and the possible presence of ad trackers. Other vectors are harder to evaluate. For example, to audit permissions, we can inspect the app’s manifest entries, but the frequency and context of access have to be determined either from device logs (by running the app on a rooted device) or by injecting monitoring code into the app. Even though this is relatively easy to do for one app, automating it for a large set of apps will be a challenge. I will be working on a proof-of-concept (POC) version of this system, which I will share in the coming weeks. Stay tuned.
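
For the static half of the permission audit, a minimal sketch is to read the `<uses-permission>` entries from the manifest and compare them with what the app's category plausibly needs. It assumes the manifest has already been decoded from the APK's binary format into plain-text XML (for example with a tool such as apktool). The category-to-permissions mapping is hypothetical and only illustrates the GPS-tracker/contacts question.

```python
import xml.etree.ElementTree as ET

# Attribute names in the manifest are qualified with the Android XML namespace.
ANDROID_NS = "{http://schemas.android.com/apk/res/android}"

# Hypothetical mapping from app category to permissions that fit its purpose.
EXPECTED_PERMISSIONS = {
    "gps_tracker": {
        "android.permission.ACCESS_FINE_LOCATION",
        "android.permission.ACCESS_COARSE_LOCATION",
        "android.permission.INTERNET",
    },
}


def declared_permissions(manifest_xml: str) -> set:
    """Return the permission names declared via <uses-permission> in a decoded manifest."""
    root = ET.fromstring(manifest_xml)
    names = (elem.get(ANDROID_NS + "name") for elem in root.iter("uses-permission"))
    return {name for name in names if name}


def unexpected_permissions(manifest_xml: str, category: str) -> set:
    """Declared permissions that the app's category does not obviously need."""
    return declared_permissions(manifest_xml) - EXPECTED_PERMISSIONS.get(category, set())


manifest = """<manifest xmlns:android="http://schemas.android.com/apk/res/android"
          package="com.example.gpstracker">
    <uses-permission android:name="android.permission.ACCESS_FINE_LOCATION"/>
    <uses-permission android:name="android.permission.INTERNET"/>
    <uses-permission android:name="android.permission.READ_CONTACTS"/>
</manifest>"""

print(unexpected_permissions(manifest, "gps_tracker"))
# {'android.permission.READ_CONTACTS'}
```

This only shows which permissions are declared; how often and in what context they are actually used still has to come from device logs or injected monitoring code.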